Humans have probably been fascinated by human behaviour for as long as we have existed. What drives our behaviour? How have our habits and way of life changed over time? These questions have attracted a great deal of interest. One of the main ways to gain insight into human behaviour is through facial expressions and the emotions they convey. Facial expressions are key to understanding human emotions and how we react to products and services. By one commonly cited estimate, only one-third of the emotional meaning we perceive comes from words and tone of voice; the remaining two-thirds comes from facial expressions. By using AI to recognize our expressions and emotions, businesses and researchers can understand how people react to particular stimuli and raise the bar for human-machine interaction.

Expression analysis has use cases across many industries, including:

- Hospitality

- Education

- Market Research

- Smart Cities

- Healthcare

- Retail

- Entertainment

How does emotion analysis work?

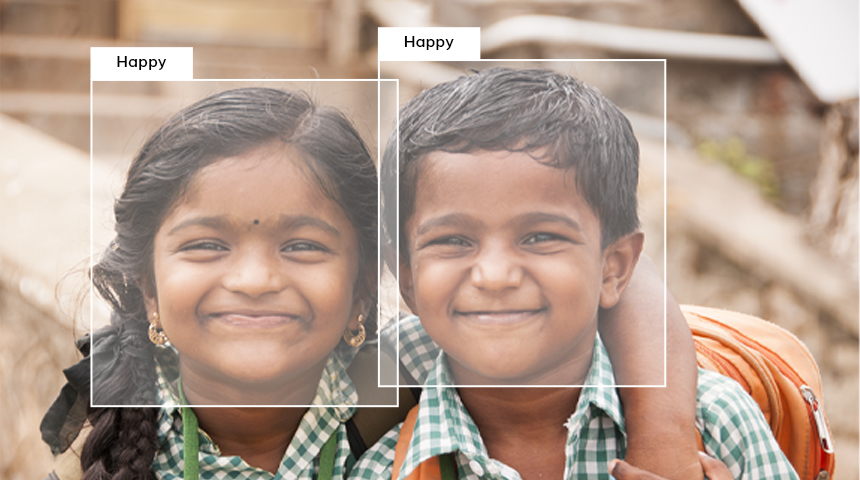

Emotion analysis combines artificial intelligence with an understanding of psychology. Facial expression recognition is a three-step process:

- Face detection – AI-based technology that identifies human faces in images and video feeds. It locates and tracks the position and motion of facial features such as the eyebrows, eyes, lips and mouth.

- Expression analysis – An AI-based deep learning model analyses the detected facial expressions.

- Expression classification – The model compares the recognized expressions with labelled data and classifies them into emotions.
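The classification step can be illustrated with a minimal sketch. It assumes that face detection and expression analysis have already reduced each face to a handful of numeric measurements; the `FaceFeatures` fields, their 0-1 scale and the labelled examples are all hypothetical. The comparison uses a simple 1-nearest-neighbour rule rather than a deep learning model, purely to show what "comparing to labelled data" means.

```python
from dataclasses import dataclass
from math import dist


@dataclass
class FaceFeatures:
    # Hypothetical normalized measurements (0.0 to 1.0) assumed to come
    # from the face detection and expression analysis steps.
    lip_corner_lift: float
    cheek_raise: float
    eye_openness: float
    brow_raise: float

    def as_vector(self):
        return (self.lip_corner_lift, self.cheek_raise,
                self.eye_openness, self.brow_raise)


# Hypothetical labelled data: example feature vectors tagged with an emotion.
LABELLED = [
    (FaceFeatures(0.9, 0.8, 0.3, 0.2), "Happiness"),
    (FaceFeatures(0.1, 0.1, 0.95, 0.9), "Surprise"),
    (FaceFeatures(0.0, 0.1, 0.4, 0.1), "Sadness"),
    (FaceFeatures(0.4, 0.2, 0.5, 0.3), "Neutral"),
]


def classify(face, labelled=LABELLED):
    """Return the label of the closest labelled example (1-nearest neighbour)."""
    _, label = min(labelled,
                   key=lambda pair: dist(face.as_vector(), pair[0].as_vector()))
    return label


print(classify(FaceFeatures(0.85, 0.75, 0.35, 0.25)))  # Happiness
```

A production system would learn from thousands of labelled faces and use richer features, but the principle of assigning the label of the most similar known examples is the same.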

There are seven basic emotion categories that humans commonly exhibit through facial expressions:

- Happiness

- Surprise

- Sadness

- Anger

- Fear

- Disgust

- Neutral

Each emotion is represented by a variety of facial positions and movement patterns. Take happiness, for example: a smile, raised and wrinkled cheeks, and squinted eyes are signs of a happy emotion.
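One established way to describe such patterns is the Facial Action Coding System (FACS), in which a genuine smile is usually associated with action unit 6 (cheek raiser, which also narrows the eyes) together with action unit 12 (lip corner puller). The rule-based sketch below assumes that action-unit intensities have already been estimated on a 0-1 scale; the 0.5 threshold and the function name are hypothetical choices for illustration.

```python
from typing import Mapping

# Hypothetical threshold: intensity at or above which an action unit counts as active.
ACTIVE_THRESHOLD = 0.5


def looks_happy(au_intensity: Mapping[int, float],
                threshold: float = ACTIVE_THRESHOLD) -> bool:
    """Return True when AU6 (cheek raiser) and AU12 (lip corner puller) are both active.

    au_intensity maps FACS action-unit numbers to intensities in [0, 1];
    units that are missing from the mapping are treated as inactive.
    """
    cheek_raise = au_intensity.get(6, 0.0)
    lip_corner_pull = au_intensity.get(12, 0.0)
    return cheek_raise >= threshold and lip_corner_pull >= threshold


print(looks_happy({6: 0.7, 12: 0.8}))  # True: smile with raised cheeks
print(looks_happy({12: 0.9}))          # False: lips only, no cheek raise
```

Requiring both units reflects the point above that happiness is signalled by a combination of movements rather than a single one.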

The Technology

There is no unified approach to emotion recognition in video and photos. Developers either choose the existing technique best suited to their task or devise a new one. Most approaches draw on two branches of AI: machine learning and deep learning. Combined with computer vision techniques, these algorithms can recognize a person's emotions in real time from video as well as from photos.
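In the deep learning approach, a classifier such as a convolutional neural network typically ends with a softmax layer that turns one raw score per emotion into probabilities, and the predicted emotion is the one with the highest probability. The sketch below shows only that final step; the scores are made up for illustration, whereas a real model would compute them from the face image.

```python
import math

EMOTIONS = ["Happiness", "Surprise", "Sadness", "Anger", "Fear", "Disgust", "Neutral"]


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1 (numerically stable)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max to avoid overflow
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(scores):
    """Return (emotion, probability) for the highest-scoring class."""
    if len(scores) != len(EMOTIONS):
        raise ValueError("expected one score per emotion")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


# Hypothetical raw scores (logits) from a model for one detected face.
label, p = predict_emotion([3.1, 0.4, -1.2, -0.5, 0.0, -2.0, 1.0])
print(label, round(p, 2))
```

Reporting the probability alongside the label lets an application ignore low-confidence predictions, for example when a face is partly hidden.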

Conclusion

“Enabling Cameras to visualize our world”

Humans have always been able, to a certain extent, to recognize the expressions and emotions of the people around them. Until recently, however, using technology to recognize and interpret emotions was unheard of. Popular and promising, emotion recognition has the potential to greatly simplify a variety of processes and can be of substantial benefit to businesses and service providers. Building facial emotion recognition software requires both an extensive understanding of human psychology and deep expertise in AI development, and the models must be trained continually on more data to improve their accuracy across the many industries they can benefit.